Natural Language Processing

-

In a webinar hosted by Basis Technology, "Mirror, Mirror on the Wall -- which Country Will Rule them All?", ISIT's Carolyn Meinel explains how she used Natural Language Processing to forecast geopolitical questions.

-

Natural Language Processing (NLP): part of the solution to the validity and usability crisis in COVID-19 research papers?

-

Problem Statement: The peer review system was already strained before COVID-19. Although the disease was unknown for most of 2019, the number of papers associated with COVID-19 approached 4,000 a week by the middle of 2020. This flood asks the impossible: for the sake of global security and health, researchers should accept more peer-review assignments and perform them both more meticulously and more rapidly. This rush of papers already includes some that influenced public policy and reached global audiences, only to be retracted later. Some of these retractions occurred in top journals, e.g., The Lancet and the New England Journal of Medicine. On the publication side, researchers are submitting work to preprint servers (which are not peer-reviewed) so readily, and with such attendant problems of low accuracy and high public danger, that some outlets are currently refusing to accept COVID-19-related preprints. Finally, peer review often fails to identify underpowered studies, statistical errors, and questionable data. In combination, these factors frequently mislead researchers, policy makers, and the public, thus worsening the pandemic. This lack of capacity to adequately assess the flood of incoming data is shared by the IC's analytic community and by many other communities researching other domains.

-

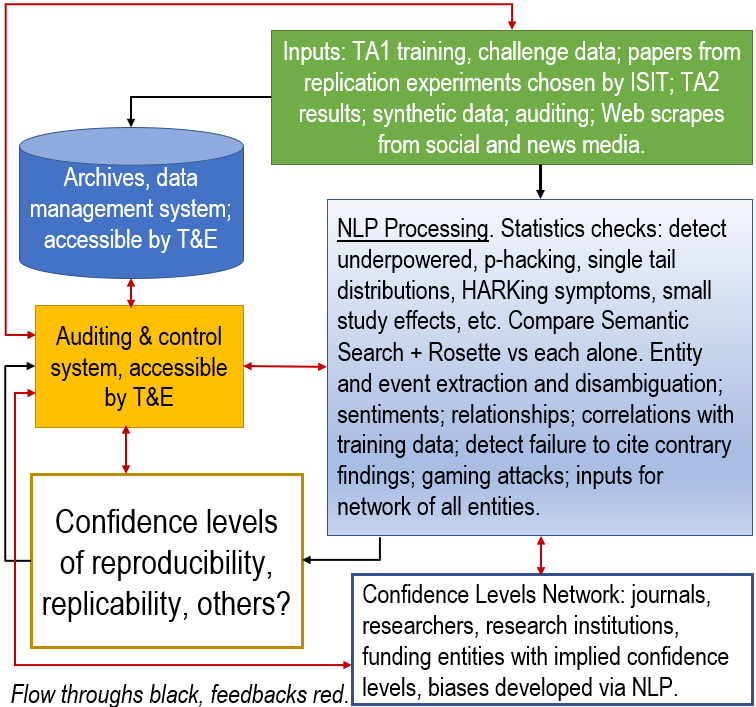

As part of this effort, ISIT's Carolyn Meinel is collaborating with researchers at Basis Technology and KaDSci, LLC to explore the potential of their new text-vector NLP system, Semantic Studio. The figure above is a preliminary architecture diagram of how we hypothesize we could achieve high accuracy in predicting which COVID-19 papers can be replicated.

-

Preliminary findings suggest that NLP can reveal the difference between papers that replicate and papers that fail replication, a key element in determining whether a COVID-19 research paper is valid.

-

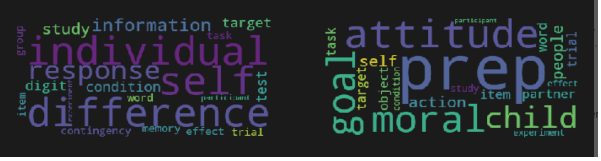

Pictured above: Dr. Alex Jones' word-cloud depiction of his Naive Bayes analysis of the 100 replicated papers vs. papers that failed replication, from Colin F. Camerer et al., “Evaluating the replicability of social science experiments in Nature and Science between 2010 and 2015,” Nature Human Behaviour, Volume 2, pp. 637–644 (2018). https://www.nature.com/articles/s41562-018-0399-z
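The caption does not say how the word weights in the cloud were derived. One plausible reading, offered only as an illustration, is that each word is sized by its Naive Bayes log-likelihood ratio between the two groups of papers. This minimal Python sketch assumes whitespace tokenization and Laplace (add-one) smoothing; the function name `word_log_ratios` and the toy snippets are hypothetical and are not Dr. Jones' code or data.

```python
import math
from collections import Counter


def word_log_ratios(replicated_docs, failed_docs, alpha=1.0):
    """Return log P(w | replicated) - log P(w | failed) for every word.

    Hypothetical helper: assumes lowercase whitespace tokenization and
    additive (Laplace) smoothing with pseudo-count `alpha`.
    """
    rep_counts = Counter(w for doc in replicated_docs for w in doc.lower().split())
    fail_counts = Counter(w for doc in failed_docs for w in doc.lower().split())
    vocab = set(rep_counts) | set(fail_counts)

    # Smoothed denominators: total tokens per class plus alpha per vocabulary word.
    rep_total = sum(rep_counts.values()) + alpha * len(vocab)
    fail_total = sum(fail_counts.values()) + alpha * len(vocab)

    return {
        w: math.log((rep_counts[w] + alpha) / rep_total)
        - math.log((fail_counts[w] + alpha) / fail_total)
        for w in vocab
    }


# Invented toy snippets standing in for paper text (not real data).
replicated = ["large sample preregistered", "large sample"]
failed = ["small sample surprising", "surprising effect"]

ratios = word_log_ratios(replicated, failed)
for word, score in sorted(ratios.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{word:>14} {score:+.2f}")
```

Positive scores mark words more typical of replicated papers and negative scores words more typical of failed ones; a word-cloud tool could then size each word by the absolute value of its score.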

The word cloud shows how obviously the two groups differ on average. The challenge is to determine which individual research papers will almost certainly replicate and which will not. Dr. Jones' approach correctly identified 80% of these papers. The architecture diagram above shows how my colleagues and I hypothesize that we might improve on Jones' impressive results.
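Moving from group averages to verdicts on individual papers means scoring each paper against both classes and picking the more probable one. The sketch below is a minimal multinomial Naive Bayes classifier under stated assumptions: whitespace tokenization, add-one smoothing, and leave-one-out cross-validation to estimate accuracy. All function names and the toy documents are hypothetical; the source does not describe Jones' features, preprocessing, or how the 80% figure was measured.

```python
import math
from collections import Counter


def tokenize(text):
    # Assumption: simple lowercase whitespace tokenization.
    return text.lower().split()


def train_nb(docs, labels, alpha=1.0):
    """Fit log class priors and add-alpha smoothed log word likelihoods."""
    classes = sorted(set(labels))
    vocab = {w for d in docs for w in tokenize(d)}
    priors, likelihoods = {}, {}
    for c in classes:
        class_docs = [d for d, y in zip(docs, labels) if y == c]
        priors[c] = math.log(len(class_docs) / len(docs))
        counts = Counter(w for d in class_docs for w in tokenize(d))
        total = sum(counts.values()) + alpha * len(vocab)
        likelihoods[c] = {w: math.log((counts[w] + alpha) / total) for w in vocab}
    return priors, likelihoods, vocab


def predict_nb(model, doc):
    """Return the class with the highest log-posterior score for `doc`."""
    priors, likelihoods, vocab = model
    scores = {}
    for c in priors:
        # Words never seen in training are skipped rather than smoothed.
        scores[c] = priors[c] + sum(
            likelihoods[c][w] for w in tokenize(doc) if w in vocab
        )
    return max(scores, key=scores.get)


def leave_one_out_accuracy(docs, labels):
    """Train on all papers but one, test on the held-out paper, repeat."""
    correct = 0
    for i in range(len(docs)):
        model = train_nb(docs[:i] + docs[i + 1:], labels[:i] + labels[i + 1:])
        correct += predict_nb(model, docs[i]) == labels[i]
    return correct / len(docs)


# Invented toy abstracts (not real data) labeled by replication outcome.
docs = [
    "large preregistered sample replicated effect",
    "large sample direct replication",
    "preregistered large cohort",
    "small surprising effect",
    "surprising small priming",
    "small sample surprising result",
]
labels = ["replicated"] * 3 + ["failed"] * 3

model = train_nb(docs, labels)
print(predict_nb(model, "large preregistered study"))
print(f"leave-one-out accuracy: {leave_one_out_accuracy(docs, labels):.0%}")
```

Leave-one-out suits small collections such as a single replication project, because every paper serves once as a test case while the remaining papers train the model.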